Hardware secure enclaves are increasingly used to run complex applications. Unfortunately, existing and emerging enclave architectures do not allow secure and efficient implementation of custom page fault handlers. This limitation impedes in-enclave use of secure memory-mapped files and prevents extensions of the application memory layer commonly used in untrusted systems, such as transparent memory compression or access to remote memory.

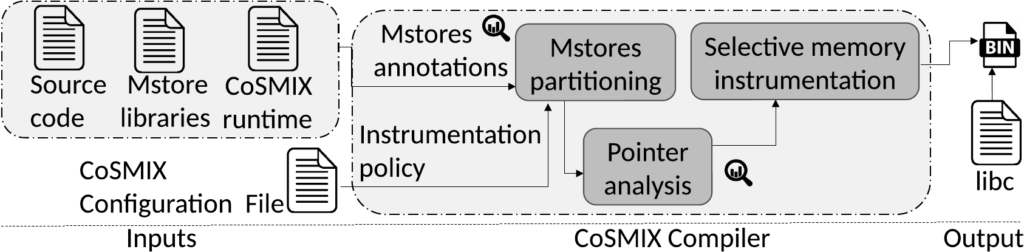

We propose a novel memory store abstraction that allows the implementation of application-level secure page fault handlers. To that end, we prototyped CoSMIX, a compiler that instruments selected application memory accesses to use one or more memory stores, guided by a global instrumentation policy or by code annotations, with no other changes to the application code. Our prototype runs on Intel SGX enclaves and is compatible with popular SGX execution environments, including SCONE, Anjuna, and Graphene. We show that memory stores make it easy to improve the performance, functionality, and security of enclaves simply by recompiling the application with specific memory stores.

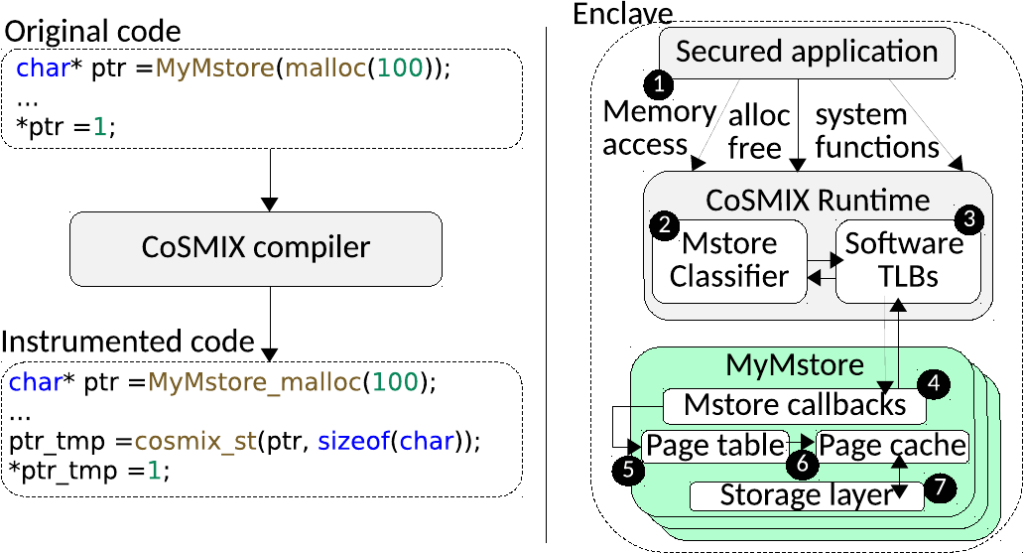

The CoSMIX compiler first analyzes the application's original code and then automatically instruments only the accesses to the requested memory ranges. In this way, CoSMIX ensures that these ranges obey the semantics defined by their memory stores.

The instrumented code invokes the CoSMIX runtime, which applies common optimizations to reduce instrumentation overhead before invoking the appropriate memory store functionality. For example, when a memory store supports caching (as described below), the runtime can return a cached result, which significantly reduces the overhead of invoking the memory store.
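To make the instrumentation concrete, the following C sketch shows what an instrumented 32-bit load might turn into, assuming a single cached mstore that owns one contiguous address range. All names here (`rt_load_u32`, `mstore_range`, `soft_tlb`, `stub_mpf_handler_c`) are hypothetical and are not CoSMIX's actual runtime API; the one-entry software TLB only illustrates the kind of caching optimization the runtime can apply before falling back to the mstore.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define MPAGE_SIZE 4096u

/* Address range handed out by a hypothetical cached mstore's alloc.
 * Instrumented accesses outside it go to regular memory. */
static _Alignas(4096) uint8_t mstore_range[4 * MPAGE_SIZE];

/* Stand-in page cache and fault handler (cf. mpf_handler_c); a real mstore
 * would fetch, decrypt, and verify the page here. */
static uint8_t mpage_cache[4][MPAGE_SIZE];
static unsigned fault_count; /* how often the slow path ran */

static void *stub_mpf_handler_c(void *ptr) {
    size_t page = (size_t)((uint8_t *)ptr - mstore_range) / MPAGE_SIZE;
    assert(page < 4);
    fault_count++;
    return mpage_cache[page];
}

/* One-entry software TLB: the last mstore page translated by the runtime. */
static struct { size_t page; uint8_t *mpage; } soft_tlb = { SIZE_MAX, NULL };

static int in_mstore(const void *p) {
    uintptr_t a = (uintptr_t)p, lo = (uintptr_t)mstore_range;
    return a >= lo && a - lo < sizeof mstore_range;
}

/* What the compiler could emit instead of a plain `*p` load.
 * Assumes aligned accesses that never straddle two mpages. */
static uint32_t rt_load_u32(const uint32_t *p) {
    if (!in_mstore(p))
        return *p;                                      /* ordinary memory */
    size_t off = (size_t)((const uint8_t *)p - mstore_range);
    if (soft_tlb.page != off / MPAGE_SIZE) {            /* TLB miss */
        soft_tlb.page = off / MPAGE_SIZE;
        soft_tlb.mpage = stub_mpf_handler_c(mstore_range + soft_tlb.page * MPAGE_SIZE);
    }
    uint32_t v;
    memcpy(&v, soft_tlb.mpage + off % MPAGE_SIZE, sizeof v);
    return v;
}

/* Original loop body:  sum += a[i];
 * Instrumented:        sum += rt_load_u32(&a[i]);  */
static uint64_t sum_instrumented(const uint32_t *a, size_t n) {
    uint64_t sum = 0;
    for (size_t i = 0; i < n; i++)
        sum += rt_load_u32(&a[i]);
    return sum;
}
```

Accesses to ordinary memory pay only a range check, and repeated accesses to the same mpage pay only a TLB comparison, so the mstore's fault handler runs once per page rather than once per access.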

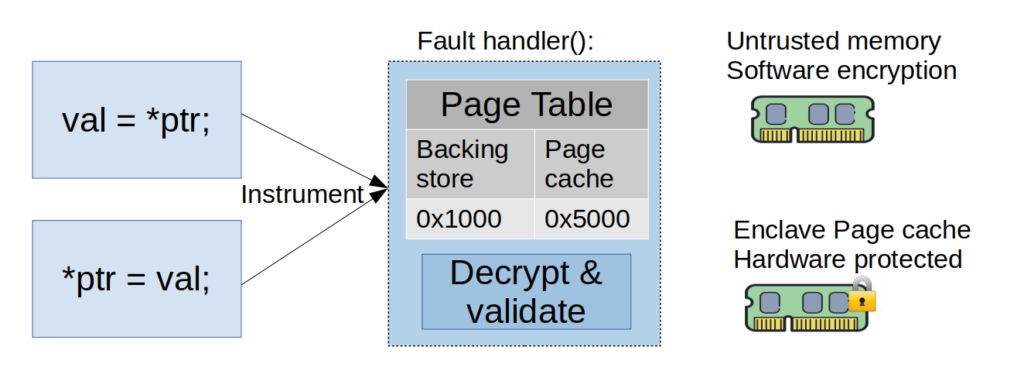

At a high level, a memory store (mstore for short) implements another layer of virtual memory on top of an abstract storage layer. An mstore operates on pages and keeps track of the page-to-data mappings in an internal page table. When the application accesses instrumented memory, the runtime invokes the mstore's software page fault handler, which retrieves the page contents (e.g., the secure mmap mstore reads the data from a file and decrypts it) and makes them accessible to the application.

A cached mstore maintains its own page cache to reduce accesses to the storage layer, whereas a direct-access mstore does not cache contents. To override the default memory semantics with custom ones, CoSMIX invokes the appropriate mstore callback, as listed below. Because mstores are so general, this interface can remain extremely slim, allowing developers to focus on the custom functionality they wish to implement for each memory access.

| Callback | Purpose |
|---|---|
| **All mstores** | |
| `mstore_init(params)` / `mstore_release()` | Initialize / tear down |
| `void* alloc(size_t s, void* priv_data)` / `free(void* ptr)` | Allocate / free buffer |
| `size_t alloc_size(void* ptr)` | Allocation size |
| `size_t get_mpage_size()` | Get the size of the mpage |
| **Direct-access** | |
| `mpf_handler_d(void* ptr, void* dst, size_t s)` | mpage fault on access to `ptr`; store the value in `dst` |
| `write_back(void* ptr, void* src, size_t size)` | Write back the value in `src` to `ptr` |
| **Cached** | |
| `void* mpf_handler_c(void* ptr)` | mpage fault on access to `ptr`; return a pointer to the mpage |
| `flush(void* ptr, size_t size)` | Write the mpages in the range `ptr:ptr+size` to the mstore |
| `get_mstorage_base()` / `get_mpage_cache_base()` | Get the base address of the mstorage / mpage cache |
| `notify_tlb_cached(void* ptr)` / `notify_tlb_dropped(void* ptr, bool dirty)` | The runtime cached / dropped the `ptr` translation in its TLB |
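The callback interface can be pictured as a C struct of function pointers through which the runtime dispatches. In the sketch below, only the callback names and parameters come from the table; the `mstore_ops` struct, the `int` return type of `mstore_init`, the bump-allocated arena, and the `pt_`/`rt_` helpers are assumptions for illustration. It implements a trivial pass-through direct-access mstore and omits the cached-mstore callbacks.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Direct-access mstore callbacks, named after the table (hypothetical struct). */
struct mstore_ops {
    int    (*mstore_init)(void *params);
    void   (*mstore_release)(void);
    void  *(*alloc)(size_t s, void *priv_data);
    void   (*free)(void *ptr);
    size_t (*alloc_size)(void *ptr);
    size_t (*get_mpage_size)(void);
    void   (*mpf_handler_d)(void *ptr, void *dst, size_t s);
    void   (*write_back)(void *ptr, void *src, size_t size);
};

/* Toy storage layer: a bump-allocated arena. A 16-byte header before each
 * buffer records its size for alloc_size; free never reclaims space. */
#define ARENA_SIZE (64 * 1024)
#define HDR 16
static _Alignas(16) uint8_t arena[ARENA_SIZE];
static size_t arena_used;

static int    pt_init(void *params) { (void)params; arena_used = 0; return 0; }
static void   pt_release(void) { arena_used = 0; }
static void   pt_free(void *ptr) { (void)ptr; }
static size_t pt_mpage_size(void) { return 4096; }

static void *pt_alloc(size_t s, void *priv_data) {
    (void)priv_data;
    size_t need = HDR + ((s + 15) & ~(size_t)15);
    if (need > ARENA_SIZE - arena_used) return NULL;
    uint8_t *blk = arena + arena_used;
    memcpy(blk, &s, sizeof s);
    arena_used += need;
    return blk + HDR;
}

static size_t pt_alloc_size(void *ptr) {
    size_t s;
    memcpy(&s, (uint8_t *)ptr - HDR, sizeof s);
    return s;
}

/* Pass-through semantics: a custom mstore would decompress, decrypt,
 * or fetch remote data here instead of copying. */
static void pt_mpf_handler_d(void *ptr, void *dst, size_t s) { memcpy(dst, ptr, s); }
static void pt_write_back(void *ptr, void *src, size_t size) { memcpy(ptr, src, size); }

static const struct mstore_ops passthrough_ops = {
    .mstore_init = pt_init, .mstore_release = pt_release,
    .alloc = pt_alloc, .free = pt_free, .alloc_size = pt_alloc_size,
    .get_mpage_size = pt_mpage_size,
    .mpf_handler_d = pt_mpf_handler_d, .write_back = pt_write_back,
};

/* What instrumented `*p` loads and `*p = v` stores could be rewritten to. */
static int rt_load_int(const struct mstore_ops *ops, int *p) {
    int v;
    ops->mpf_handler_d(p, &v, sizeof v);
    return v;
}
static void rt_store_int(const struct mstore_ops *ops, int *p, int v) {
    ops->write_back(p, &v, sizeof v);
}
```

A custom direct-access mstore would keep the same shape and change only the bodies of `pt_mpf_handler_d` and `pt_write_back`, which is what keeps the interface slim.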

Next, we show how we built three custom memory stores that provide different functionality for enclaves, together with use cases in which each is useful.

Enclave page faults occur when the enclave accesses pages that are not resident, for example, because the enclave exceeds its available physical memory. Unlike native applications, SGX enclaves have very limited physical memory (about 94MB), and page faults are much more expensive because they are handled by the untrusted operating system: each page fault forces an exit from the enclave, the operating system handles the fault, and execution then re-enters the enclave. These transitions incur high direct costs as well as indirect ones, such as TLB flushes and cache pollution.

To address this, we propose in-enclave paging using the Secure User-managed Virtual Memory (SUVM) abstraction. SUVM enables exit-less memory paging for enclaves by keeping a page table, a page cache, and a fault handler inside the enclave. SUVM maps naturally onto a cached, memory-backed mstore, which keeps its own page table and page cache in the enclave. The mstore's alloc function returns a pointer into the storage layer in untrusted memory. When mpf_handler_c is invoked, the mstore consults its page table to check whether the requested page is already cached. If not, it reads the page's contents from the storage layer, decrypts them, verifies their integrity against a per-page signature kept securely inside the enclave, and copies the result into a page in the page cache. Subsequent accesses to the page are served directly from the page cache. When the page cache is full, the mstore evicts pages back to the untrusted storage layer.
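The SUVM fault path can be sketched as a small cached mstore in C. This is an illustrative sketch under stated assumptions, not the SUVM implementation: the cache size, the FIFO eviction policy, and the `suvm_access` helper are hypothetical, and the XOR cipher and FNV-1a hash are insecure placeholders standing in for real authenticated encryption and the per-page signature. The design point it shows is that the expected tag of every page stays in enclave memory and is refreshed on each eviction, so that (with a real MAC) a page modified in untrusted memory is detected on the next fault.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define PG     4096u  /* mpage size */
#define NPAGES 8      /* pages in the untrusted storage layer */
#define NSLOTS 2      /* capacity of the in-enclave page cache */

/* Untrusted memory: holds only encrypted pages. Pointers returned by the
 * mstore's alloc point into this range. */
static uint8_t untrusted_store[NPAGES][PG];
#define STORE_BASE ((uint8_t *)untrusted_store)

/* Enclave-resident state: page table, page cache, and per-page tags. */
static int      slot_of[NPAGES];      /* page -> cache slot, or -1 */
static int      page_in_slot[NSLOTS]; /* cache slot -> page, or -1 */
static bool     dirty[NSLOTS];
static uint64_t tag[NPAGES];          /* expected tag of each stored page */
static uint8_t  cache[NSLOTS][PG];
static int      next_victim;          /* FIFO (round-robin) eviction */
static unsigned evictions;

/* PLACEHOLDERS, NOT SECURE: stand-ins for authenticated encryption
 * (e.g., AES-GCM). XOR with a fixed stream is its own inverse. */
static void toy_crypt(uint8_t *dst, const uint8_t *src, size_t page) {
    for (size_t i = 0; i < PG; i++)
        dst[i] = (uint8_t)(src[i] ^ (uint8_t)(0x5A ^ (page * 31) ^ i));
}
static uint64_t toy_tag(const uint8_t *ct, size_t page) { /* FNV-1a */
    uint64_t h = 14695981039346656037ull ^ page;
    for (size_t i = 0; i < PG; i++) { h ^= ct[i]; h *= 1099511628211ull; }
    return h;
}

static void suvm_init(void) {
    static const uint8_t zero[PG];
    for (size_t p = 0; p < NPAGES; p++) {
        toy_crypt(untrusted_store[p], zero, p);
        tag[p] = toy_tag(untrusted_store[p], p);
        slot_of[p] = -1;
    }
    for (int s = 0; s < NSLOTS; s++) { page_in_slot[s] = -1; dirty[s] = false; }
    next_victim = 0;
    evictions = 0;
}

/* Evict a cache slot, re-encrypting dirty contents into untrusted memory. */
static void evict(int s) {
    int p = page_in_slot[s];
    if (p < 0) return;
    if (dirty[s]) {
        toy_crypt(untrusted_store[p], cache[s], (size_t)p);
        tag[p] = toy_tag(untrusted_store[p], (size_t)p);
    }
    slot_of[p] = -1;
    page_in_slot[s] = -1;
    dirty[s] = false;
    evictions++;
}

/* Cached-mstore fault handler: return the in-enclave mpage backing ptr,
 * or NULL if the untrusted copy fails its integrity check. */
static void *mpf_handler_c(void *ptr) {
    size_t p = (size_t)((uint8_t *)ptr - STORE_BASE) / PG;
    assert(p < NPAGES);
    if (slot_of[p] >= 0) return cache[slot_of[p]];             /* cache hit */
    int s = next_victim;                                         /* miss */
    next_victim = (next_victim + 1) % NSLOTS;
    evict(s);
    if (toy_tag(untrusted_store[p], p) != tag[p]) return NULL;  /* tampered */
    toy_crypt(cache[s], untrusted_store[p], p);                  /* decrypt */
    slot_of[p] = s;
    page_in_slot[s] = (int)p;
    return cache[s];
}

/* Hypothetical runtime helper: translate ptr and mark the page dirty on writes. */
static uint8_t *suvm_access(void *ptr, bool is_write) {
    size_t off = (size_t)((uint8_t *)ptr - STORE_BASE);
    uint8_t *mpage = mpf_handler_c(ptr);
    if (mpage == NULL) return NULL;
    if (is_write) dirty[slot_of[off / PG]] = true;
    return mpage + off % PG;
}
```

The application's pointers refer to untrusted memory, but it only ever dereferences the in-enclave copies returned by the fault handler, and no enclave exit is needed on a miss.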


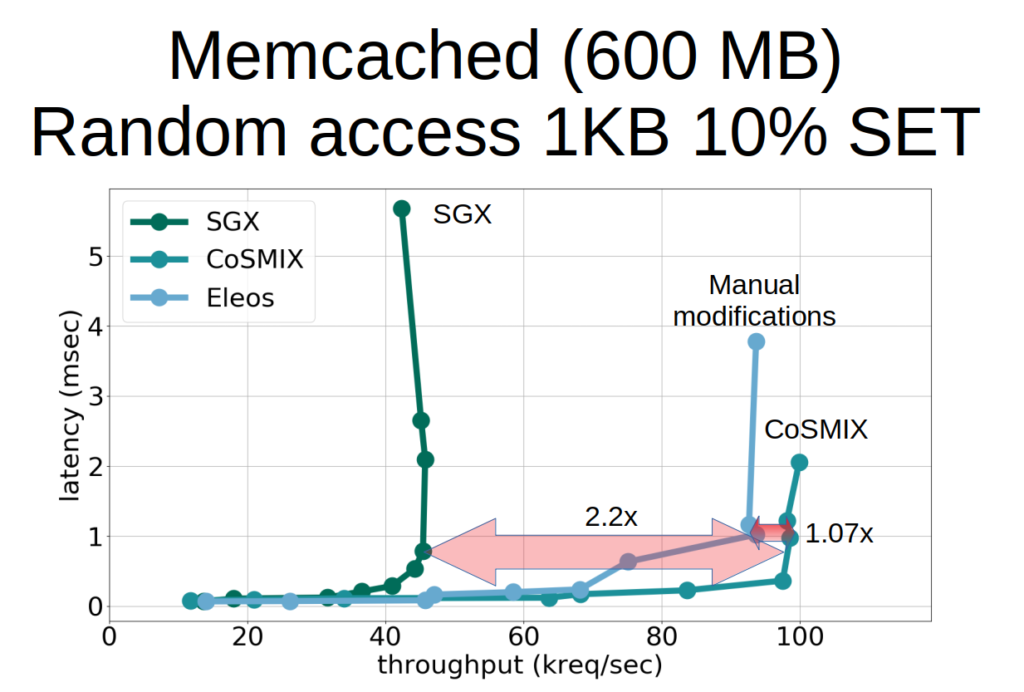

To showcase the performance benefit of avoiding costly enclave transitions with SUVM, we measured the throughput of the Memcached key-value store. We load Memcached with enough items to occupy more than 6x the available physical enclave memory, and then issue random 1KB requests with a 10% SET / 90% GET ratio. Measuring throughput and latency, we find that using SUVM to avoid costly enclave transitions improves performance by more than 2x. Furthermore, thanks to the aggressive optimizations of the CoSMIX runtime, the CoSMIX-instrumented version even outperforms a version of Memcached that was manually modified to use SUVM.

The mstore abstraction is powerful: it enables enclaves to use encrypted and integrity-protected memory-mapped files, much like the mmap system call. This is impossible in enclaves that lack a generic secure page fault mechanism, because the operating system, which takes part in page fault handling, is not trusted. This functionality can be implemented similarly to SUVM, except that the storage layer is backed by a file instead of anonymous memory.
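For a file-backed mapping, only the storage layer changes relative to the memory-backed case. The C sketch below assumes POSIX `pread`/`pwrite` on an already-open file descriptor, a tiny direct-mapped page cache, and a hypothetical `fmap_access` entry point that takes a file offset. Decryption and integrity checks are left as comments (they would mirror the SUVM case), so this version is not itself secure.

```c
#define _XOPEN_SOURCE 700
#include <assert.h>
#include <stdbool.h>
#include <stdio.h>
#include <string.h>
#include <sys/types.h>
#include <unistd.h>

#define FPG    4096   /* mpage size */
#define FSLOTS 4      /* direct-mapped cache: file page p lives in slot p % FSLOTS */

static int           backing_fd = -1;     /* untrusted file */
static long          cached_page[FSLOTS]; /* file page held by each slot, or -1 */
static bool          slot_dirty[FSLOTS];
static unsigned char fcache[FSLOTS][FPG]; /* in-enclave page cache */

/* Storage layer: the part that differs from the memory-backed mstore. */
static bool storage_read(long page, unsigned char *buf) {
    ssize_t n = pread(backing_fd, buf, FPG, (off_t)page * FPG);
    if (n < 0) { perror("pread"); return false; }
    memset(buf + n, 0, FPG - (size_t)n);  /* simplification: past EOF reads as 0 */
    /* A secure mstore would verify and decrypt buf here. */
    return true;
}

static bool storage_write(long page, const unsigned char *buf) {
    /* A secure mstore would encrypt and tag the page before writing it. */
    return pwrite(backing_fd, buf, FPG, (off_t)page * FPG) == FPG;
}

static void file_mstore_init(int fd) {
    assert(fd >= 0);
    backing_fd = fd;
    for (int s = 0; s < FSLOTS; s++) { cached_page[s] = -1; slot_dirty[s] = false; }
}

/* Fault handling: return the cached byte at file offset off, loading the
 * page (after writing back a dirty occupant) on a miss. */
static unsigned char *fmap_access(size_t off, bool is_write) {
    long page = (long)(off / FPG);
    int s = (int)(page % FSLOTS);
    if (cached_page[s] != page) {
        if (cached_page[s] >= 0 && slot_dirty[s] &&
            !storage_write(cached_page[s], fcache[s]))
            return NULL;
        slot_dirty[s] = false;
        cached_page[s] = -1;
        if (!storage_read(page, fcache[s])) return NULL;
        cached_page[s] = page;
    }
    if (is_write) slot_dirty[s] = true;
    return &fcache[s][off % FPG];
}

/* Analogous to msync(): write every dirty cached page back to the file. */
static bool file_mstore_flush(void) {
    for (int s = 0; s < FSLOTS; s++) {
        if (cached_page[s] >= 0 && slot_dirty[s]) {
            if (!storage_write(cached_page[s], fcache[s])) return false;
            slot_dirty[s] = false;
        }
    }
    return true;
}
```

Dirty pages reach the file either when they are evicted by a conflicting page or when `file_mstore_flush` is called, which plays the role of `msync` for the mapping.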

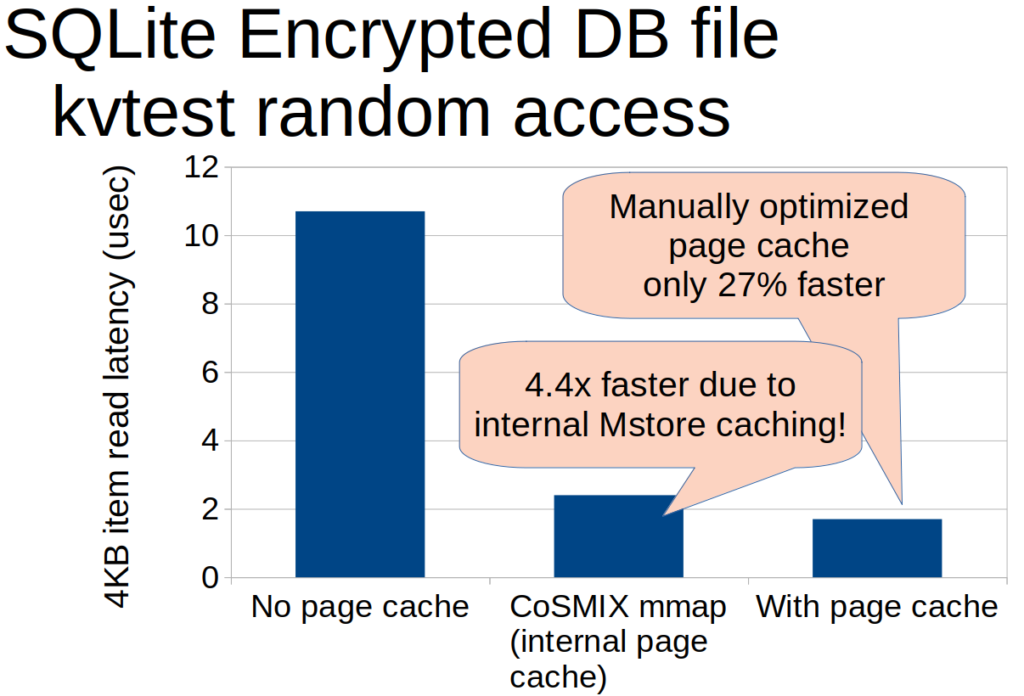

To demonstrate the usefulness of this abstraction, we use the popular SQLite database, which uses mmap for low-latency random access to the database file. We compare our secure file-mapping mstore against SQLite's regular read() system calls, both with no cache (every request goes to storage) and with the entire database file cached (every request is served from enclave memory). The file-mapping mstore has its own internal cache, independent of SQLite. Therefore, even applications that do not implement their own caches can benefit from it (we measured a 4.4x improvement in access latency). Furthermore, accesses through the mstore are only 27% slower than SQLite's own cache, which is specifically optimized for reading database files. This demonstrates the low instrumentation overhead of our approach.

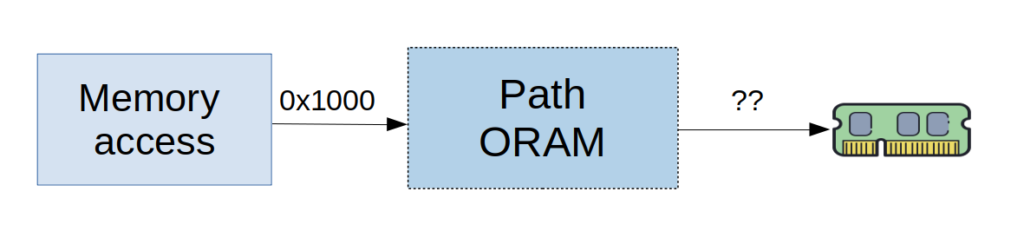

Oblivious RAM (ORAM) obfuscates memory access patterns by shuffling and re-encrypting data on every access. This protects against memory-related side-channel attacks, such as the page fault side-channel attack to which enclaves are known to be vulnerable. For example, if the enclave accesses address 0x1000 with ORAM access semantics, an attacker can infer neither the actual address accessed nor whether the access was a read or a write.

To support ORAM, we use a direct-access mstore, since caching and serving requests from a cache would reveal the access pattern. We use the Path ORAM algorithm, and the mstore simply forwards all requests to the storage layer, which resides in enclave memory. ORAM makes all of these requests oblivious.
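The following C sketch shows how a direct-access mstore can make every request oblivious. For brevity it swaps in the simplest ORAM, a linear scan that reads and rewrites every block on every access, instead of Path ORAM; Path ORAM achieves the same goal while touching only a logarithmic number of blocks per access, by remapping blocks to random tree paths. The names `oram_access` and `linear_scan` are hypothetical. The sketch hides only the access pattern over the ORAM store itself; the branch that selects between copying data in and copying it out is not hardened.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define BLK     64    /* block size in bytes */
#define NBLOCKS 256   /* number of blocks in the oblivious store */

static uint8_t oram_store[NBLOCKS][BLK];  /* lives in enclave memory */
static unsigned long blocks_touched;      /* demo counter */

/* One linear scan: every block is read and rewritten, and the target block
 * is selected with branch-free masks, so the trace over oram_store does not
 * depend on idx or on is_write. */
static void linear_scan(size_t idx, uint8_t *buf, int is_write) {
    uint8_t wmask = (uint8_t)-(uint8_t)(is_write != 0);  /* 0xFF or 0x00 */
    for (size_t b = 0; b < NBLOCKS; b++) {
        uint8_t hit = (uint8_t)-(uint8_t)(b == idx);      /* 0xFF at idx */
        uint8_t wr = (uint8_t)(hit & wmask);              /* write this block */
        uint8_t rd = (uint8_t)(hit & ~wmask);             /* read this block */
        for (size_t i = 0; i < BLK; i++) {
            uint8_t old = oram_store[b][i];
            oram_store[b][i] = (uint8_t)((wr & buf[i]) | (~wr & old));
            buf[i] = (uint8_t)((rd & old) | (~rd & buf[i]));
        }
        blocks_touched++;
    }
}

/* Would back an ORAM mstore's mpf_handler_d (is_write = 0) and write_back
 * (is_write = 1), once ptr is translated into a byte offset addr. Both kinds
 * of access perform a fetch scan followed by a write-back scan. */
static void oram_access(size_t addr, void *data, size_t s, int is_write) {
    uint8_t blk[BLK] = {0};
    assert(addr < (size_t)NBLOCKS * BLK && addr % BLK + s <= BLK);
    size_t idx = addr / BLK, off = addr % BLK;
    linear_scan(idx, blk, 0);                 /* fetch the block */
    if (is_write) memcpy(blk + off, data, s); /* update the private copy */
    else          memcpy(data, blk + off, s);
    linear_scan(idx, blk, 1);                 /* always write the block back */
}
```

Every call touches exactly 2 × NBLOCKS blocks regardless of the address or the operation, which is what makes the trace independent of the request. It also shows why a direct-access mstore is required: a cache hit would skip the scan and reveal that the block was accessed recently.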

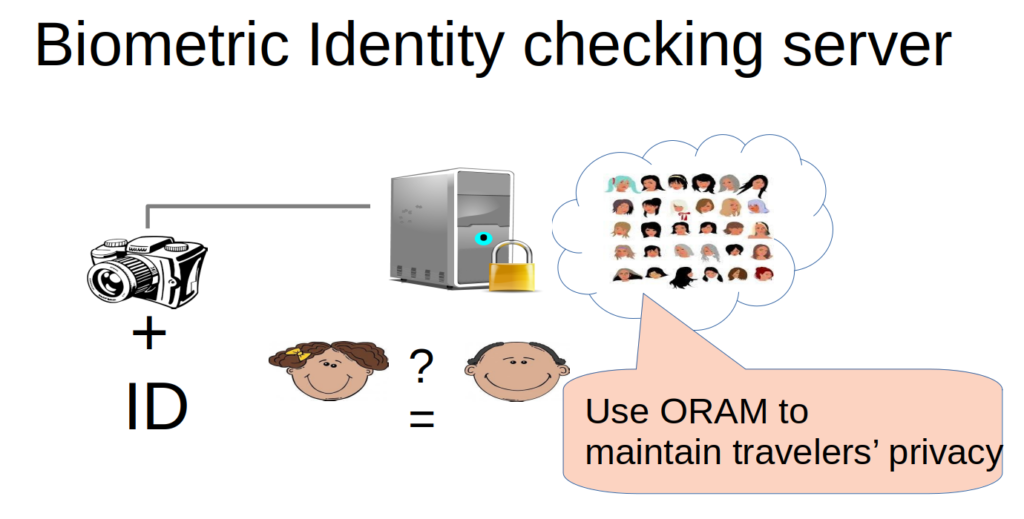

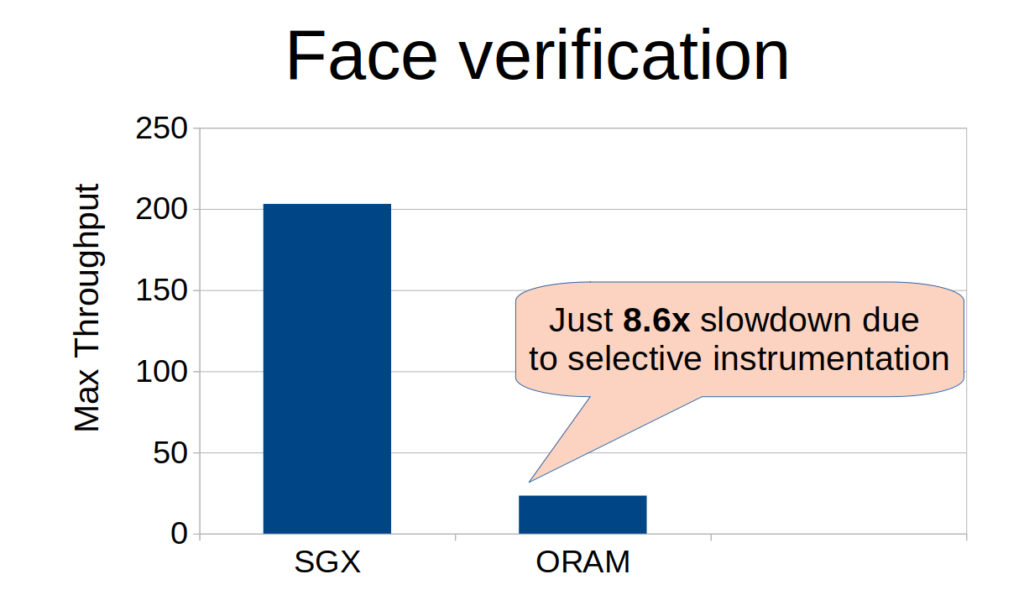

Next, we show how ORAM can protect a face verification server that validates face images and IDs against a biometric database. Such servers are commonly used in airports to validate travelers' identities, so accessing this sensitive information obliviously preserves travelers' privacy. While ORAM is known to slow down applications by an order of magnitude or more, we observe only an 8.6x drop in the server's throughput when using ORAM. The reason is that with CoSMIX, only the accesses to the sensitive biometric database are made oblivious, rather than all memory accesses.

We implemented CoSMIX as an LLVM IR module pass. The runtime and mstores are written in C/C++. Creating a new mstore is particularly simple because CoSMIX hides the internal details of the compiler and of memory management. Developers can thus focus on the functionality they wish to add to enclaves rather than on the underlying mechanisms.

This project was conducted in collaboration with Yan Michalevsky (Anjuna Security) and Christof Fetzer (TU Dresden).